Migrating data between different database systems is a crucial task for organizations looking to optimize their data management strategies. As businesses evolve, the need to transfer data across platforms (relational, NoSQL, or graph databases) grows. Understanding the landscape of database systems and the unique challenges they present will help you execute a successful migration.

In this guide, we will explore the different types of database systems, the techniques for data migration, and the tools that can facilitate this process. By evaluating various methods and best practices, you will be equipped to make informed decisions, ensuring data integrity and minimizing downtime during the transition.

Understanding Database Systems

Database systems are fundamental components of modern software applications, enabling the storage, retrieval, and management of data. Several types exist, each serving different purposes and use cases. Understanding them is crucial when migrating data between systems, because the characteristics of each database type can significantly affect performance, scalability, and functionality. The main types are relational databases, NoSQL databases, and graph databases, each designed to handle specific data structures and access patterns.

Relational databases, such as MySQL and PostgreSQL, utilize a structured query language (SQL) for defining and manipulating data. They excel at managing structured data with predefined schemas, allowing for complex queries and transactions. In contrast, NoSQL databases like MongoDB and Cassandra cater to unstructured or semi-structured data, offering flexibility in data representation and scaling horizontally across many servers. Graph databases, such as Neo4j, focus on the relationships between data points, making them ideal for applications like social networks and recommendation systems.

Types of Database Systems

Understanding the nuances between different database systems is essential for selecting the right one for your project. Here are the main categories of database systems:

- Relational Databases: These databases store data in tables and use SQL for data management. They are known for their ACID (Atomicity, Consistency, Isolation, Durability) properties, ensuring reliable transactions. Examples include MySQL and PostgreSQL.

- NoSQL Databases: NoSQL databases are designed for large sets of distributed data and can handle various data formats, such as key-value pairs, documents, graphs, or wide-column stores. They provide scalability and flexibility. Notable examples are MongoDB and Cassandra.

- Graph Databases: These databases focus on the relationships between data points, utilizing graph structures with nodes and edges. They are particularly useful in scenarios where relationships are as important as the data itself. Neo4j is a prime example.
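
To make the three models concrete, the sketch below represents one invented fact (a customer who placed an order) in each shape. SQLite stands in for a relational system only because it ships with Python; the table names, field names, and the `PLACED` edge label are assumptions for illustration, not taken from any particular product.

```python
import sqlite3

# Relational: rows in separate tables, linked by a foreign key.
conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE customers (id INTEGER PRIMARY KEY, name TEXT NOT NULL);
    CREATE TABLE orders (
        id INTEGER PRIMARY KEY,
        customer_id INTEGER NOT NULL REFERENCES customers(id),
        total REAL NOT NULL
    );
    INSERT INTO customers VALUES (1, 'Ada');
    INSERT INTO orders VALUES (10, 1, 42.5);
""")
relational_row = conn.execute(
    "SELECT c.name, o.total FROM orders o JOIN customers c ON c.id = o.customer_id"
).fetchone()

# Document: one self-contained record with the order nested inside it.
document = {"_id": 1, "name": "Ada", "orders": [{"id": 10, "total": 42.5}]}

# Graph: nodes carry properties, edges carry the relationship itself.
nodes = {"customer:1": {"name": "Ada"}, "order:10": {"total": 42.5}}
edges = [("customer:1", "PLACED", "order:10")]
```

Moving between these shapes is where most cross-system migration work lies: a join in the relational model becomes nesting in a document and an edge in a graph.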

Key Features and Differences

When evaluating popular database systems, it is important to consider their key features and differences. Each system has unique attributes that make it suitable for certain applications. The following table summarizes these aspects:

| Database System | Type | Key Features |
|---|---|---|
| MySQL | Relational | Open-source, strong ACID compliance, widely used, flexible storage engines. |
| PostgreSQL | Relational | Open-source, advanced features, extensibility, support for JSON and XML. |
| MongoDB | NoSQL | Document-based, schema-less, horizontal scaling, rich querying capabilities. |
| Cassandra | NoSQL | Highly scalable, decentralized architecture, suitable for large amounts of data. |
| Neo4j | Graph | Intuitive graph model, optimized for traversing relationships, powerful querying language. |

Factors to Consider When Choosing a Database System

Several factors should guide the selection of a database system for data migration. Understanding these considerations can help ensure the right fit for application requirements:

- Data Structure: The type of data being stored (structured, semi-structured, unstructured) can dictate the choice of database system.

- Scalability: Evaluate whether the database can handle the expected growth in data volume and user load.

- Performance: Different systems have varying performance characteristics based on use case, such as read-heavy or write-heavy workloads.

- Transaction Support: Consider whether ACID compliance is necessary for the application’s transactional integrity, as some NoSQL options may compromise it for performance.

- Community and Support: A strong community and robust documentation can be invaluable for troubleshooting and support during migration.

Data Migration Techniques

Data migration is a critical process that involves transferring data between different systems or formats. To ensure a smooth transition, it’s crucial to understand the various methods available for data migration, each with its unique advantages and challenges. This section delves into the primary data migration techniques, providing clarity on their applications and effectiveness.

ETL (Extract, Transform, Load)

ETL is a widely adopted method in data migration that involves three key steps: extracting data from source systems, transforming it into a suitable format, and loading it into the target system. This technique is particularly useful for integrating data from multiple sources into a centralized system, often used in data warehousing.
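
The three steps can be sketched as three small functions. This is a minimal illustration, assuming two in-memory SQLite databases as stand-ins for the source and target; the `legacy_users` and `users` tables, their columns, and the DD/MM/YYYY source date format are all hypothetical.

```python
import sqlite3

def extract(source):
    """Extract: read raw rows from the source system."""
    return source.execute(
        "SELECT id, full_name, signup_date FROM legacy_users"
    ).fetchall()

def transform(rows):
    """Transform: trim names and convert DD/MM/YYYY dates to ISO 8601."""
    cleaned = []
    for user_id, full_name, signup_date in rows:
        day, month, year = signup_date.split("/")
        cleaned.append((user_id, full_name.strip(), f"{year}-{month}-{day}"))
    return cleaned

def load(target, rows):
    """Load: write the cleaned rows inside a single transaction."""
    with target:  # commits on success, rolls back on an exception
        target.executemany(
            "INSERT INTO users (id, name, signup_date) VALUES (?, ?, ?)", rows
        )

def run_etl(source, target):
    rows = transform(extract(source))
    load(target, rows)
    return len(rows)

def demo():
    source = sqlite3.connect(":memory:")
    source.execute(
        "CREATE TABLE legacy_users (id INTEGER, full_name TEXT, signup_date TEXT)"
    )
    source.executemany(
        "INSERT INTO legacy_users VALUES (?, ?, ?)",
        [(1, "  Ada Lovelace ", "10/12/2023"), (2, "Alan Turing", "23/06/2024")],
    )
    target = sqlite3.connect(":memory:")
    target.execute(
        "CREATE TABLE users (id INTEGER PRIMARY KEY, name TEXT, signup_date TEXT)"
    )
    run_etl(source, target)
    return target.execute(
        "SELECT id, name, signup_date FROM users ORDER BY id"
    ).fetchall()
```

Keeping the steps separate means the transformation can be unit-tested without touching either database.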

Database Replication

Database replication is a method that involves copying and maintaining database objects in multiple databases. This technique is essential for ensuring data availability and redundancy. It can be synchronous or asynchronous, depending on the requirement for real-time data consistency.

Data Export/Import

Data export/import techniques involve extracting data from a source database into a standard format, such as CSV or JSON, and then importing it into the target database. This method is straightforward and can be effective for smaller datasets or when migrating between similar database systems.
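
A minimal export/import round trip through CSV might look like the following. It assumes SQLite on both ends and a hypothetical `products` table; table and column names are interpolated into the SQL text, so they must come from trusted configuration rather than user input.

```python
import csv
import io
import sqlite3

def export_csv(conn, table, columns, out):
    """Write a header row plus every row of `table` to a file-like object."""
    writer = csv.writer(out)
    writer.writerow(columns)
    # Identifiers are trusted here; only values can be bound as parameters.
    writer.writerows(conn.execute(f"SELECT {', '.join(columns)} FROM {table}"))

def import_csv(conn, table, src):
    """Read the header to learn the columns, then insert the remaining rows."""
    reader = csv.reader(src)
    columns = next(reader)
    placeholders = ", ".join("?" for _ in columns)
    sql = f"INSERT INTO {table} ({', '.join(columns)}) VALUES ({placeholders})"
    with conn:  # one transaction for the whole file
        conn.executemany(sql, reader)

def demo():
    source = sqlite3.connect(":memory:")
    source.execute("CREATE TABLE products (id INTEGER, name TEXT, price REAL)")
    source.executemany(
        "INSERT INTO products VALUES (?, ?, ?)",
        [(1, "Widget, large", 9.5), (2, "Gadget", 20.0)],
    )
    buffer = io.StringIO()  # stands in for a file on disk
    export_csv(source, "products", ["id", "name", "price"], buffer)
    buffer.seek(0)

    target = sqlite3.connect(":memory:")
    target.execute("CREATE TABLE products (id INTEGER, name TEXT, price REAL)")
    import_csv(target, "products", buffer)
    return target.execute("SELECT id, name, price FROM products ORDER BY id").fetchall()
```

CSV carries no type information: every value arrives as text and is converted only because the target columns declare numeric types, and a NULL is exported as an empty string. Both points need explicit handling in a real migration.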

Comparison of Data Migration Techniques

Understanding the pros and cons of different data migration techniques can guide organizations in selecting the right approach for their needs. The following table summarizes the advantages and disadvantages of ETL, database replication, and data export/import methods.

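| Technique | Pros | Cons |
|---|---|---|
| ETL | Integrates data from multiple sources; transforms data into the format the target requires. | Transformation logic must be designed and maintained; usually batch-oriented, so the target can lag behind the source. |
| Database Replication | Keeps data available and redundant; synchronous mode provides real-time consistency. | Synchronous mode adds write latency, while asynchronous mode can lag; simplest between similar systems. |
| Data Export/Import | Straightforward; uses standard formats such as CSV or JSON; effective for smaller datasets or similar systems. | Slow for large datasets; type and schema information can be lost in the intermediate format. |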

Best Practices for Data Integrity During Migration

Ensuring data integrity throughout the migration process is paramount. Adopting best practices can minimize risks and enhance reliability. Key recommendations include:

- Perform thorough testing of the migration process before executing it on live data.

- Utilize data validation techniques to verify that the data remains accurate and complete after migration.

- Maintain detailed logs of the migration process to track changes and identify discrepancies.

- Implement rollback procedures to revert to the original state in case of errors or failures during migration.

- Engage stakeholders in the planning phase to ensure that all relevant data contexts are considered.
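
Logging, validation, and rollback can be combined in a single load step. The sketch below assumes SQLite and a hypothetical `accounts` table; it relies on the `sqlite3` connection context manager, which commits when the block succeeds and rolls back when an exception escapes it.

```python
import logging
import sqlite3

logging.basicConfig(level=logging.INFO)
log = logging.getLogger("migration")

def load_with_rollback(target, rows, expected_count):
    """Insert rows atomically; undo the whole batch if any check fails."""
    try:
        with target:  # commit on success, rollback on exception
            target.executemany(
                "INSERT INTO accounts (id, balance) VALUES (?, ?)", rows
            )
            actual = target.execute("SELECT COUNT(*) FROM accounts").fetchone()[0]
            if actual != expected_count:
                raise ValueError(f"expected {expected_count} rows, found {actual}")
        log.info("loaded %d rows", len(rows))
        return True
    except (sqlite3.Error, ValueError) as exc:
        log.error("batch rolled back: %s", exc)
        return False
```

A transaction only protects the target database. Reverting a completed cutover additionally requires a backup or snapshot of the original system.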

Tools and Software for Data Migration

Data migration is a critical process in the lifecycle of database management, and the selection of the right tools can significantly influence the success of migration projects. Various software solutions are designed to facilitate data migration between different systems, ensuring efficiency, accuracy, and the ability to handle large volumes of data. This section provides an overview of several popular tools used for data migration, along with their features and capabilities.

Overview of Popular Data Migration Tools

Several tools have emerged in the market to assist organizations in streamlining their data migration efforts. Below are three notable examples along with their key features:

- Talend: Talend is a data integration platform, long known for its open-source Talend Open Studio edition, that offers robust ETL (Extract, Transform, Load) capabilities. It allows users to connect to multiple data sources and perform complex transformations in an intuitive manner. Talend also supports cloud and on-premises deployments, making it versatile for various business environments.

- Apache NiFi: Apache NiFi is a powerful data flow automation tool that provides real-time data ingestion and processing. With its user-friendly graphical interface, users can design data flows without extensive coding. NiFi supports various data formats and protocols, ensuring seamless data migration across different systems.

- AWS Database Migration Service: AWS Database Migration Service (AWS DMS) allows users to migrate databases to AWS quickly and securely. It supports homogeneous and heterogeneous migrations, with features that allow for minimal downtime during the process. The service can handle both data replication and data transformation, making it a comprehensive solution for enterprises moving to the cloud.

Features and Capabilities of Selected Tools

When evaluating data migration tools, it’s essential to consider specific features that align with project requirements. The following aspects are crucial for effective data migration:

- Scalability: The ability to scale the migration process to accommodate varying data volumes is vital. Tools like Talend and AWS Database Migration Service support large-scale migrations, facilitating business growth.

- Data Transformation: Efficient data transformation capabilities enable organizations to preprocess data during migration. Apache NiFi excels in this area with its real-time processing capabilities, allowing for data cleansing and enrichment on-the-fly.

- Security Features: Security remains a top priority in data migration. Tools should offer encryption, access controls, and compliance with data protection regulations. AWS Database Migration Service, for instance, provides robust monitoring and security measures to safeguard data during transfer.

Checklist for Selecting the Right Migration Tool

Choosing the appropriate data migration tool requires careful consideration of several criteria. The following checklist can guide organizations in making an informed decision:

- Compatibility with existing data sources and targets.

- Ease of use and availability of user support.

- Cost of implementation and ongoing maintenance.

- Performance metrics, including speed and reliability.

- Customization options to fit specific migration needs.

- Community support and documentation availability.

Preparing for Data Migration

Before initiating a data migration, it is essential to prepare thoroughly to ensure a smooth transition. This stage involves assessing the quality of the existing data, creating a comprehensive mapping document, and adopting strategies to minimize downtime during the migration process. Proper preparation lays the foundation for successful migration, reducing potential issues and ensuring operational continuity.

Assessing Data Quality

Assessing the quality of data prior to migration is critical as it directly impacts the integrity and usability of the data in the new system. Poor data quality can lead to failed migrations, data corruption, and inefficient operations post-migration. Key steps for assessing data quality include:

- Data Profiling: Analyze the data to understand its structure, content, and constraints. Ensure that data types are consistent and conform to expected formats.

- Identify Inconsistencies: Look for duplicate records, missing values, and anomalies that could complicate the migration process.

- Data Validation: Implement validation rules to check for data accuracy, ensuring that the information is both complete and correct.

- Data Cleansing: Remove or rectify any errors identified during the profiling phase. This may involve standardizing formats, filling in missing values, or eliminating duplicates.
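
A first profiling pass can be as simple as counting rows, nulls, distinct values, and duplicates per column. The helper below is a sketch against SQLite; `profile_column` and the `customers.email` column used in the usage note are hypothetical, and the identifiers are interpolated into SQL, so they must be trusted.

```python
import sqlite3

def profile_column(conn, table, column):
    """Return basic quality metrics for one column of one table."""
    total, nulls, distinct = conn.execute(
        f"SELECT COUNT(*), SUM({column} IS NULL), COUNT(DISTINCT {column}) "
        f"FROM {table}"
    ).fetchone()
    # Non-null values that occur more than once, with their frequencies.
    duplicates = conn.execute(
        f"SELECT {column}, COUNT(*) FROM {table} "
        f"WHERE {column} IS NOT NULL "
        f"GROUP BY {column} HAVING COUNT(*) > 1 ORDER BY {column}"
    ).fetchall()
    return {
        "rows": total,
        "nulls": nulls or 0,  # SUM() yields NULL on an empty table
        "distinct": distinct,
        "duplicates": duplicates,
    }
```

Calling `profile_column(conn, "customers", "email")` before migration shows whether a unique or NOT NULL constraint in the target schema would reject existing rows.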

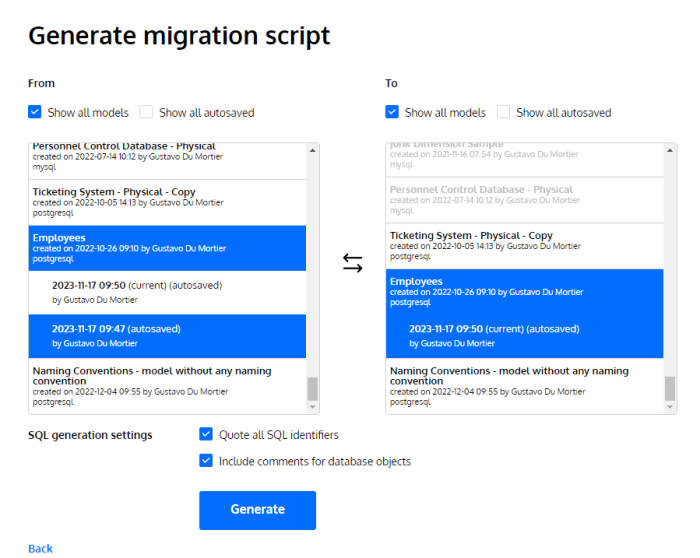

Creating a Data Mapping Document

A data mapping document is a crucial component of the migration process. It serves as a blueprint that outlines how data from the source system corresponds to the target system. A well-structured mapping document aids in maintaining data integrity and ensuring a smooth migration. The creation of this document involves several steps:

- Identify Source and Target Systems: Clearly define the databases involved, noting the specific tables and fields that will be migrated.

- Establish Mappings: For each field in the source system, determine the corresponding field in the target system. Include details such as data types, constraints, and any transformations that may be necessary.

- Document Dependencies: Note any dependencies between data sets; this helps in understanding the relationships and order of migration.

- Review and Validate: Share the mapping document with stakeholders for feedback and validation to ensure accuracy and completeness before moving forward.
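
Once the mapping is agreed, it can also be kept in machine-readable form so the migration code and the document cannot drift apart. The following is one possible shape, with invented field names: each source field maps to a target field plus a conversion function.

```python
# Hypothetical mapping: source field -> (target field, conversion function).
FIELD_MAP = {
    "cust_id": ("customer_id", int),
    "cust_name": ("full_name", str.strip),
    "is_active": ("active", lambda value: value in ("Y", "y", "1")),
}

def apply_mapping(source_row, field_map=FIELD_MAP):
    """Translate one source record (a dict) into the target schema."""
    unmapped = set(source_row) - set(field_map)
    if unmapped:
        # Fail loudly instead of silently dropping data.
        raise KeyError(f"no mapping for source fields: {sorted(unmapped)}")
    return {
        target: convert(source_row[source])
        for source, (target, convert) in field_map.items()
    }
```

For example, `apply_mapping({"cust_id": "7", "cust_name": " Ada ", "is_active": "Y"})` yields `{"customer_id": 7, "full_name": "Ada", "active": True}`. Rejecting unmapped fields is a deliberate choice here: a field missing from the mapping is more likely an oversight than an intentional omission.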

Minimizing Downtime

Minimizing downtime during the data migration process is critical for maintaining business operations and ensuring user satisfaction. Effective strategies to reduce downtime include:

- Incremental Migration: Rather than migrating all data at once, consider an incremental approach. Migrate smaller batches during off-peak hours to limit impact on users.

- Data Replication: Utilize data replication techniques to keep the target system updated in real time, allowing for a quicker switchover once the migration is complete.

- Testing Environment: Set up a testing environment to conduct trial runs of the migration. Identify potential issues and address them without affecting the live environment.

- Communication Plan: Inform stakeholders and users about the migration schedule and potential impacts. Providing clear updates can help manage expectations and reduce frustration.
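
Incremental migration is often implemented by walking the source table in primary-key order and committing one batch at a time. The sketch below assumes SQLite, a hypothetical `events` table with an integer `id` key, and rows that are only ever appended; updates and deletes on already-copied rows would need change tracking, which is not shown.

```python
import sqlite3

def migrate_in_batches(source, target, batch_size=1000, last_id=0):
    """Copy rows with id > last_id in key order, one transaction per batch.

    Returns the highest id copied so an interrupted or later run can resume.
    """
    while True:
        rows = source.execute(
            "SELECT id, payload FROM events WHERE id > ? ORDER BY id LIMIT ?",
            (last_id, batch_size),
        ).fetchall()
        if not rows:
            return last_id
        with target:  # each batch commits (or rolls back) on its own
            target.executemany(
                "INSERT INTO events (id, payload) VALUES (?, ?)", rows
            )
        last_id = rows[-1][0]
```

Filtering on `id > last_id` rather than using `OFFSET` keeps each batch query cheap on large tables, and persisting the returned id between runs lets a final short pass pick up rows written since the previous one.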

Post-Migration Steps

Successfully migrating data to a new database system is only half the battle. The post-migration phase is critical for ensuring that the data remains intact and that the new system operates efficiently. This stage involves thorough validation of the migrated data, optimization of system performance, and identification of any issues that may arise in the new environment. Addressing these aspects meticulously can safeguard the integrity and usability of the database.

Validation of Migrated Data

Validating migrated data is essential to confirm that it has been transferred accurately and performs as expected in the new database system. Any discrepancies can lead to significant operational issues, making this step a priority. To validate migrated data effectively, follow these steps:

- Conduct Data Comparison: Compare key records between the old and new systems to ensure data consistency. Use checksum or hash functions for accuracy.

- Verify Data Types: Ensure that all data types are correctly mapped and that values fall within expected ranges.

- Check Referential Integrity: Validate foreign key constraints and relationships to confirm that data integrity is maintained.

- Run Test Queries: Execute typical queries on the new database to evaluate performance and accuracy, comparing results with those from the old system.

- Review Application Functionality: Ensure that applications interacting with the database are functioning correctly after migration.
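
The data comparison step can be sketched as a row count plus a hash over the rows in a fixed order. The helper name `table_fingerprint` is hypothetical, and SQLite stands in for both systems. Hashing `repr(row)` only works when both drivers return the same Python types, so a real cross-system check would first normalize values (for example, decimals and timestamps) to a canonical string form.

```python
import hashlib
import sqlite3

def table_fingerprint(conn, table, columns, key):
    """Return (row_count, sha256 hex digest) over rows sorted by `key`."""
    digest = hashlib.sha256()
    count = 0
    # Identifiers are interpolated, so they must come from trusted config.
    query = f"SELECT {', '.join(columns)} FROM {table} ORDER BY {key}"
    for row in conn.execute(query):
        digest.update(repr(row).encode("utf-8"))
        count += 1
    return count, digest.hexdigest()

def tables_match(source, target, table, columns, key):
    """True when both sides have the same rows in the compared columns."""
    return table_fingerprint(source, table, columns, key) == table_fingerprint(
        target, table, columns, key
    )
```

Comparing the count first gives a cheap early signal. When the digests differ, fingerprinting key ranges separately narrows the discrepancy down without a row-by-row diff of the whole table.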

Optimization of Performance

Post-migration optimization is vital to enhance the performance of the new database system. A streamlined database can significantly improve query response times and overall user satisfaction. Steps for optimizing performance include:

- Analyze Query Performance: Utilize database performance monitoring tools to identify slow-running queries and optimize them through indexing or rewriting.

- Implement Proper Indexing: Create indexes on frequently accessed columns to expedite data retrieval operations.

- Reorganize and Rebuild Indexes: Regularly maintain indexes to enhance query performance and reduce fragmentation.

- Adjust Configuration Settings: Modify database configuration settings such as memory allocation and connection limits to match workload requirements.

- Perform Regular Backups: Establish a routine backup schedule to prevent data loss and ensure system reliability.
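
The effect of indexing can be checked by inspecting the query plan before and after creating an index. The sketch uses SQLite's `EXPLAIN QUERY PLAN` on a hypothetical `orders` table; other systems expose the same idea through their own `EXPLAIN` variants, and the exact plan text differs between systems and versions.

```python
import sqlite3

def query_plan(conn, sql, params=()):
    """Return SQLite's plan for a query as one readable string."""
    rows = conn.execute("EXPLAIN QUERY PLAN " + sql, params).fetchall()
    return " | ".join(row[-1] for row in rows)  # last column holds the detail

def demo():
    conn = sqlite3.connect(":memory:")
    conn.execute(
        "CREATE TABLE orders (id INTEGER PRIMARY KEY, customer_id INTEGER, total REAL)"
    )
    conn.executemany(
        "INSERT INTO orders (customer_id, total) VALUES (?, ?)",
        [(i % 50, float(i)) for i in range(1000)],
    )
    sql = "SELECT total FROM orders WHERE customer_id = ?"
    before = query_plan(conn, sql, (7,))  # full table scan expected
    conn.execute("CREATE INDEX idx_orders_customer ON orders (customer_id)")
    after = query_plan(conn, sql, (7,))   # plan should now name the index
    return before, after
```

Running the application's most frequent queries through a check like this after migration shows which ones still fall back to full scans in the new system.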

Common Post-Migration Issues and Solutions

After migration, various issues can surface that may impact the new database’s effectiveness. Identifying these common challenges early allows for quicker resolutions and minimizes downtime. Some of the common post-migration issues include:

- Data Inconsistencies: Differences in data formats or corruption during transfer can lead to inconsistencies. Re-validation and correction processes are essential.

- Slow Performance: If the new database operates slower than expected, it may require performance tuning as outlined earlier.

- Application Errors: Applications may experience errors if they rely on database structures that have changed. Testing and debugging will be necessary.

- Security Issues: New configurations may expose security vulnerabilities. Regular audits and updates are crucial to address these concerns.

- Integration Challenges: Existing systems may have difficulties interfacing with the new database. Reviewing and updating integration protocols can resolve these issues.