Optimizing database performance is critical for large applications: it can make or break both user experience and application efficiency. As applications grow in size and complexity, keeping the underlying database fast becomes essential. By understanding the factors that affect database performance, identifying common bottlenecks, and applying proven optimization techniques, developers can significantly improve the responsiveness and reliability of their systems.

This guide delves into the intricacies of database performance, exploring vital topics such as efficient query writing, proper configuration, and the role of hardware in performance enhancement. With practical insights and actionable strategies, readers will be equipped to tackle the challenges of large-scale applications and maintain a seamless user experience.

Understanding Database Performance

Database performance is a critical aspect of large applications, influencing user experience and operational efficiency. The factors affecting it range from hardware specifications to the design of the database schema itself. A thorough understanding of these factors is essential for ensuring that applications can handle large volumes of data without significant delays.

Several elements play a crucial role in determining database performance: the database design, server hardware, indexing strategies, and the query optimization techniques used. The size and complexity of the data also affect how well a database performs under load. Recognizing and addressing these factors early prevents slowdowns and keeps the system running smoothly.


Factors Affecting Database Performance

To optimize database performance, it’s important to understand the various factors that can impact it. These factors can be categorized as follows:

- Hardware Resources: The CPU, memory, and storage speed all influence database performance. For example, SSDs provide faster read/write speeds compared to traditional HDDs, leading to quicker data access and improved performance.

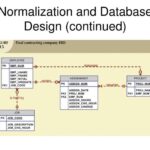

- Database Schema Design: A well-structured schema can significantly enhance performance. Normalization reduces data redundancy, while denormalization can improve read speeds by minimizing joins in complex queries.

- Query Efficiency: Poorly written queries can lead to excessive resource consumption. Using EXPLAIN plans can help developers identify inefficiencies in their SQL queries and optimize them accordingly.

- Concurrency and Load: High levels of simultaneous user activity can cause contention for resources. Implementing connection pooling can help manage this load effectively.

Common Performance Bottlenecks

In large applications, certain bottlenecks often emerge, hindering optimal database performance. Recognizing and addressing these bottlenecks is crucial for maintaining an efficient system. Some of the most common include:

- Slow Queries: Queries that take too long to execute can be the result of unoptimized SQL, leading to performance degradation.

- Indexing Issues: Missing or poorly implemented indexes can slow down data retrieval, causing delays in response times.

- Locking and Blocking: When multiple transactions attempt to access the same data simultaneously, this can result in lock contention, causing delays.

- Network Latency: High latency in data transmission between the application and the database server can affect the overall performance, particularly in distributed systems.

Significance of Indexing

Indexing is one of the most potent tools for improving database performance. It allows the database to locate and access the data more quickly than scanning entire tables. The importance of indexing can be summarized in the following points:

- Faster Query Performance: With proper indexes, the database can retrieve data much faster, which is essential for applications that require quick access to information.

- Reduced I/O Operations: Indexes minimize the amount of data the database engine needs to read, reducing the disk I/O operations significantly.

- Improved Sorting and Filtering: Indexes facilitate quicker sorting and filtering of data, which can enhance the performance of operations involving ORDER BY and WHERE clauses.

- Trade-offs and Maintenance: While indexes improve read performance, they can slow down write operations due to the overhead of maintaining the indexes. It’s crucial to balance between read and write performance when designing the indexing strategy.
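The sorting and filtering point can be checked directly in a query plan. The sketch below uses SQLite through Python's built-in sqlite3 module as a stand-in for a server DBMS; the orders table, its columns, and the index name are hypothetical. It compares the plan for a filtered, sorted query before and after adding a composite index on (customer_id, created_at). Exact plan wording varies between SQLite versions, but without the index the plan typically shows a full scan plus a temporary B-tree for the ORDER BY, and with the index the sort step disappears.

```python
import sqlite3


def plan(conn, sql, params=()):
    """Return the 'detail' column of SQLite's EXPLAIN QUERY PLAN output."""
    rows = conn.execute("EXPLAIN QUERY PLAN " + sql, params).fetchall()
    return [row[3] for row in rows]  # rows are (id, parent, notused, detail)


conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE orders (id INTEGER PRIMARY KEY, customer_id INTEGER, created_at TEXT)"
)

query = "SELECT id FROM orders WHERE customer_id = ? ORDER BY created_at"

# Without an index: full table scan plus a temporary B-tree to sort the result.
before = plan(conn, query, (42,))

# Composite index: filter column first, sort column second, so matching rows
# can be read from the index already in created_at order.
conn.execute(
    "CREATE INDEX idx_orders_customer_created ON orders (customer_id, created_at)"
)
after = plan(conn, query, (42,))

print(before)
print(after)
```

Column order matters here: putting the equality-filtered column first means the rows for one customer sit together in the index, already sorted by created_at.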

“Indexing is a critical component of database optimization; it transforms the way data is accessed, impacting the overall performance significantly.”

Techniques for Optimizing Database Queries

Optimizing database queries is essential for enhancing the performance of large applications. Efficient SQL queries reduce response times, improve user experience, and lower resource consumption on database servers, so applications keep running smoothly even under heavy load.

Writing efficient SQL means structuring queries to minimize execution time and resource usage.

Additionally, query profiling and tuning are vital steps that help identify performance bottlenecks and allow developers to make precise adjustments to their SQL code.

Methods for Writing Efficient SQL Queries

To write efficient SQL queries, developers should follow several best practices that facilitate optimal performance. These methods help reduce execution time and improve the overall efficiency of database operations.

- Select Only Required Columns: When retrieving data, avoid using SELECT *. Instead, specify only the columns that the task needs. This reduces the amount of data read and returned, leading to faster queries.

- Use Proper Indexing: Create indexes on columns that are frequently searched or used in JOIN operations. Indexes can significantly speed up data retrieval. For example:

CREATE INDEX idx_customer_name ON customers (name);

- Utilize Joins Wisely: When combining tables, prefer INNER JOIN when only matching records are needed; an OUTER JOIN must also preserve unmatched rows and gives the optimizer fewer reordering options. Make sure join conditions are on indexed columns.

- Avoid Subqueries When Possible: Consider rewriting subqueries, especially correlated ones, as JOINs. Some optimizers handle JOINs more effectively than nested queries, although many modern engines rewrite simple subqueries into joins automatically, so compare the execution plans before and after the change.

- Limit Result Sets: Use the LIMIT clause (FETCH FIRST n ROWS ONLY in standard SQL, TOP in SQL Server) to restrict the number of returned rows, which reduces processing time and resource usage. For instance:

SELECT * FROM orders LIMIT 10;
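As a minimal sketch of several of these points together (selecting only the needed column, rewriting an IN subquery as an INNER JOIN, and capping the result with LIMIT), the example below runs both forms against a small SQLite database via Python's sqlite3 module and checks that they return the same rows. The tables and data are hypothetical, and whether the JOIN form is actually faster depends on the engine; confirm it with the execution plan.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE customers (id INTEGER PRIMARY KEY, name TEXT, email TEXT);
    CREATE TABLE orders (id INTEGER PRIMARY KEY, customer_id INTEGER, total REAL);
    CREATE INDEX idx_orders_customer ON orders (customer_id);
    INSERT INTO customers (id, name, email) VALUES
        (1, 'Ada', 'ada@example.com'),
        (2, 'Grace', 'grace@example.com'),
        (3, 'Linus', 'linus@example.com');
    INSERT INTO orders (customer_id, total) VALUES (1, 10.0), (1, 25.0), (3, 7.5);
""")

# Subquery form: names of customers with at least one order.
subquery_sql = """
    SELECT name FROM customers
    WHERE id IN (SELECT customer_id FROM orders)
    ORDER BY name LIMIT 10
"""

# JOIN form: same rows. DISTINCT collapses customers with several orders.
# Only the name column is fetched, and LIMIT caps the result size.
join_sql = """
    SELECT DISTINCT c.name
    FROM customers AS c
    INNER JOIN orders AS o ON o.customer_id = c.id
    ORDER BY c.name LIMIT 10
"""

via_subquery = [row[0] for row in conn.execute(subquery_sql)]
via_join = [row[0] for row in conn.execute(join_sql)]
print(via_subquery, via_join)  # ['Ada', 'Linus'] ['Ada', 'Linus']
```

Note that the JOIN needs DISTINCT to match the subquery's semantics, and that extra de-duplication can cost more than a semi-join; this is one reason to measure rather than assume.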

Importance of Query Profiling and Tuning

Query profiling and tuning let developers analyze and improve the performance of their SQL queries, identifying slow queries and making targeted adjustments. Profiling tools, such as the EXPLAIN statement in MySQL and PostgreSQL (Oracle uses EXPLAIN PLAN), show how the database plans to execute a query, which reveals the parts of the query that cause bottlenecks.

Regularly tuning queries based on profiling results can lead to substantial performance improvements.

For example, if a query is found to be performing a full table scan, adding indexes or restructuring the query can help decrease execution time significantly.
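One way to automate this check, sketched here with SQLite's EXPLAIN QUERY PLAN through Python's sqlite3 module: a hypothetical full_scans helper returns the plan steps that read a whole table, so a test or audit script can flag them. The customers table mirrors the idx_customer_name example earlier; plan text differs across engines and SQLite versions, so matching on the "SCAN" prefix is an assumption specific to SQLite.

```python
import sqlite3


def full_scans(conn, sql, params=()):
    """Hypothetical helper: return the plan steps where SQLite reads a whole table."""
    plan = conn.execute("EXPLAIN QUERY PLAN " + sql, params).fetchall()
    # Each plan row is (id, parent, notused, detail); full scans start with "SCAN".
    return [row[3] for row in plan if row[3].startswith("SCAN")]


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE customers (id INTEGER PRIMARY KEY, name TEXT, email TEXT)")
query = "SELECT id, email FROM customers WHERE name = ?"

before = full_scans(conn, query, ("Ada",))  # one SCAN step reported
conn.execute("CREATE INDEX idx_customer_name ON customers (name)")
after = full_scans(conn, query, ("Ada",))   # empty: the index is searched instead
print(before, after)
```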

Examples of Query Optimization Techniques

Real-world scenarios demonstrate how specific optimization techniques can lead to improved database performance. Here are a few examples along with code snippets to illustrate effective practices.

- Using Derived Tables: Instead of performing complex calculations in the main query, leverage derived tables to simplify your main queries:

SELECT avg_order_value
FROM (SELECT customer_id, AVG(order_value) AS avg_order_value
      FROM orders
      GROUP BY customer_id) AS derived;

- Batching Inserts: When inserting multiple rows, a single INSERT statement with multiple VALUES rows reduces per-statement overhead and round trips:

INSERT INTO products (name, price) VALUES
('Product A', 10),
('Product B', 15),
('Product C', 20);

- Utilizing Query Caching: Cache the results of frequently executed queries to avoid repeated work, either in the database where the DBMS supports it (MySQL removed its built-in query cache in version 8.0) or in the application layer. Make sure cache invalidation is handled correctly so that stale results are never served.
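When rows arrive from application code rather than as a literal list, the same idea applies through the database driver. A minimal sketch with Python's sqlite3 module: executemany sends every row through one prepared statement, and the with block wraps the whole batch in a single transaction instead of committing once per row. The products table matches the INSERT example above; other drivers offer similar batch APIs under different names.

```python
import sqlite3

products = [("Product A", 10), ("Product B", 15), ("Product C", 20)]

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE products (id INTEGER PRIMARY KEY, name TEXT, price INTEGER)")

# "with conn" commits once at the end (or rolls back on error),
# so the whole batch is written in a single transaction.
with conn:
    conn.executemany("INSERT INTO products (name, price) VALUES (?, ?)", products)

count = conn.execute("SELECT COUNT(*) FROM products").fetchone()[0]
print(count)  # 3
```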

Database Configuration and Management

Optimizing database performance for large applications requires a comprehensive approach to configuration and management. Proper database settings can significantly affect performance, scalability, and overall system stability. This section delves into essential configuration settings, regular maintenance tasks, and best practices for managing database connections effectively.

Optimal Database Configuration Settings

Configuring a database for large-scale applications involves numerous parameters that can enhance performance. Key settings include:

Memory Allocation

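Allocate enough RAM to the database server that its working set (frequently accessed data and indexes) fits in memory, which reduces disk I/O and speeds up query processing. On a dedicated MySQL server, a common rule of thumb is to give around 70-80% of available memory to the InnoDB buffer pool. Other systems differ: PostgreSQL's documentation, for example, suggests starting shared_buffers at about 25% of RAM and relying on the operating system cache for the rest.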
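Server DBMSs expose this memory budget as a setting such as MySQL's innodb_buffer_pool_size or PostgreSQL's shared_buffers. As a small runnable stand-in, the sketch below sizes SQLite's per-connection page cache, which is controlled by PRAGMA cache_size (a negative value is interpreted as KiB). The configure_page_cache helper is hypothetical, and the 64 MiB figure is an arbitrary example, not a recommendation.

```python
import sqlite3


def configure_page_cache(conn, cache_mib):
    """Set SQLite's per-connection page cache size in MiB and read it back.

    SQLite interprets a negative cache_size as a size in KiB
    (a positive value would mean a number of pages).
    """
    kib = int(cache_mib) * 1024
    conn.execute(f"PRAGMA cache_size = -{kib}")
    return conn.execute("PRAGMA cache_size").fetchone()[0]


conn = sqlite3.connect(":memory:")
size = configure_page_cache(conn, 64)  # 64 MiB is an arbitrary example value
print(size)  # -65536
```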

Connection Limits

Set a limit on the number of concurrent connections to the database (for example, max_connections in MySQL and PostgreSQL). Too many active connections can exhaust memory and CPU. Choose the limit based on the server's capacity and the application's usage patterns.

Indexing

Use indexes judiciously to improve query performance. While indexes can speed up read operations, they can slow down write operations. Therefore, a balanced approach should be taken when creating indexes.

Caching

Use caching mechanisms to store frequently accessed data in memory, reducing the need for repeated queries. Adjust cache sizes based on the workload and application usage patterns.
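Application-level result caching can be sketched in a few lines. The QueryCache class below is hypothetical (not a library API) and uses SQLite via Python's sqlite3 module: reads are memoized by SQL text and parameters, and any write made through the same object clears the cache, which is the simplest invalidation policy that avoids stale results. Real deployments usually add per-key expiry and finer-grained invalidation.

```python
import sqlite3


class QueryCache:
    """Hypothetical read-through cache keyed by (sql, params).

    Any write made through execute_write() clears the whole cache. This is
    coarse, but it never serves stale rows as long as every write goes
    through this object.
    """

    def __init__(self, conn):
        self.conn = conn
        self._results = {}
        self.hits = 0

    def query(self, sql, params=()):
        key = (sql, tuple(params))
        if key in self._results:
            self.hits += 1
            return self._results[key]
        rows = self.conn.execute(sql, params).fetchall()
        self._results[key] = rows
        return rows

    def execute_write(self, sql, params=()):
        with self.conn:  # commit the write before invalidating
            self.conn.execute(sql, params)
        self._results.clear()


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE products (name TEXT, price INTEGER)")
cache = QueryCache(conn)
cache.execute_write("INSERT INTO products VALUES (?, ?)", ("Product A", 10))

first = cache.query("SELECT name, price FROM products")   # reads the database
second = cache.query("SELECT name, price FROM products")  # served from cache
cache.execute_write("UPDATE products SET price = ? WHERE name = ?", (12, "Product A"))
third = cache.query("SELECT name, price FROM products")   # cache was cleared, re-read
print(first, third, cache.hits)  # [('Product A', 10)] [('Product A', 12)] 1
```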

“Proper database configuration can drastically reduce latency and improve transaction throughput.”

Checklist for Regular Database Maintenance Tasks

Regular maintenance of the database is critical to ensure optimal performance and uptime. The following checklist outlines essential tasks that should be performed routinely:

Backup and Recovery

Schedule regular backups to prevent data loss. Verify backup integrity and have a disaster recovery plan in place.
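Verifying a backup means opening the copy and checking it, not just confirming that the job ran. A small sketch using the online backup API of Python's sqlite3 module (Connection.backup, available since Python 3.7), followed by PRAGMA integrity_check and a row count on the copy. The orders table and the backup file name in the comment are hypothetical; server DBMSs provide their own tools, such as pg_dump for PostgreSQL or mysqldump for MySQL.

```python
import sqlite3

source = sqlite3.connect(":memory:")
source.execute("CREATE TABLE orders (id INTEGER PRIMARY KEY, total REAL)")
with source:
    source.executemany("INSERT INTO orders (total) VALUES (?)", [(9.99,), (20.0,)])

# Online backup: copy the live database into the target connection.
# In practice the target would be a file, e.g. sqlite3.connect("orders-backup.db").
backup_conn = sqlite3.connect(":memory:")
source.backup(backup_conn)

# Verify the copy instead of assuming the backup succeeded.
integrity = backup_conn.execute("PRAGMA integrity_check").fetchone()[0]
row_count = backup_conn.execute("SELECT COUNT(*) FROM orders").fetchone()[0]
print(integrity, row_count)  # ok 2
```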

Performance Monitoring

Utilize monitoring tools to track database performance metrics such as query response times, CPU usage, and disk I/O activity.

Index Maintenance

Regularly rebuild fragmented indexes and refresh the statistics the query optimizer relies on (for example, REINDEX and ANALYZE in PostgreSQL) to keep query plans efficient.
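The exact commands are engine-specific (SQL Server, for instance, uses ALTER INDEX ... REBUILD and UPDATE STATISTICS). As a runnable sketch, SQLite via Python's sqlite3 module offers REINDEX to rebuild an index and ANALYZE to refresh planner statistics, which it stores in the sqlite_stat1 table. The table and data below are hypothetical.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE customers (id INTEGER PRIMARY KEY, name TEXT);
    CREATE INDEX idx_customer_name ON customers (name);
""")
with conn:
    conn.executemany(
        "INSERT INTO customers (name) VALUES (?)",
        [(f"customer-{i}",) for i in range(1000)],
    )

# Rebuild the index from scratch.
conn.execute("REINDEX idx_customer_name")
# Refresh the statistics the query planner uses to choose indexes.
conn.execute("ANALYZE")

stats = conn.execute(
    "SELECT tbl, idx, stat FROM sqlite_stat1 WHERE idx = 'idx_customer_name'"
).fetchall()
print(stats)  # e.g. [('customers', 'idx_customer_name', '1000 1')]
```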

Data Purging


Implement a strategy for archiving or purging obsolete data to free up storage and enhance query speed.
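Purging is usually done in small batches so that each transaction stays short and holds locks only briefly, which ties back to the locking and blocking bottleneck described earlier. The sketch below uses SQLite via Python's sqlite3 module; archive_old_orders, the orders and orders_archive tables, the ISO-8601 text dates, and the batch size of 500 are all hypothetical choices.

```python
import sqlite3


def archive_old_orders(conn, cutoff, batch_size=500):
    """Hypothetical helper: move orders older than `cutoff` into orders_archive.

    Each batch runs in its own short transaction so locks are held briefly.
    Returns the number of rows moved.
    """
    moved = 0
    while True:
        ids = [row[0] for row in conn.execute(
            "SELECT id FROM orders WHERE created_at < ? ORDER BY id LIMIT ?",
            (cutoff, batch_size),
        )]
        if not ids:
            return moved
        placeholders = ",".join(["?"] * len(ids))
        with conn:  # copy and delete commit (or roll back) together
            conn.execute(
                "INSERT INTO orders_archive (id, created_at, total) "
                f"SELECT id, created_at, total FROM orders WHERE id IN ({placeholders})",
                ids,
            )
            conn.execute(f"DELETE FROM orders WHERE id IN ({placeholders})", ids)
        moved += len(ids)


conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE orders (id INTEGER PRIMARY KEY, created_at TEXT, total REAL);
    CREATE TABLE orders_archive (id INTEGER PRIMARY KEY, created_at TEXT, total REAL);
""")
with conn:
    conn.executemany(
        "INSERT INTO orders (created_at, total) VALUES (?, ?)",
        [("2020-01-15", 5.0)] * 1200 + [("2024-06-01", 8.0)] * 300,
    )

moved = archive_old_orders(conn, cutoff="2023-01-01")
remaining = conn.execute("SELECT COUNT(*) FROM orders").fetchone()[0]
archived = conn.execute("SELECT COUNT(*) FROM orders_archive").fetchone()[0]
print(moved, remaining, archived)  # 1200 300 1200
```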

Configuration Review

Periodically review and adjust configuration settings based on changes in workload and application demands.

“Regular maintenance is key to sustaining database performance and preventing potential issues.”

Best Practices for Database Connection Pooling

Connection pooling is critical in managing database connections efficiently, especially in large applications. Effective connection pooling can lead to reduced latency and better resource management. Best practices include:

Pool Size Configuration

Set an optimal size for the connection pool based on expected traffic and load patterns. Monitor performance to adjust sizes dynamically as needed.

Connection Timeout Settings

Define appropriate timeout settings to ensure that idle connections do not consume resources unnecessarily.

Pooling Strategy

Implement a strategy that efficiently manages opening and closing connections based on usage patterns. Consider using a background thread to validate connections periodically.

Error Handling

Ensure robust error handling in connection pooling logic to gracefully manage failures and resource leaks.
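The practices above can be combined into a small pool. This is a hypothetical, minimal sketch in Python using sqlite3 connections, not a production pool: it holds a fixed number of connections (pool size), raises TimeoutError if none is free within acquire_timeout (timeout setting), validates each connection with SELECT 1 before handing it out and replaces broken ones (pooling strategy), and always returns the connection in a finally block (error handling). Production applications would normally rely on the pool provided by their driver or framework.

```python
import queue
import sqlite3
from contextlib import contextmanager


class ConnectionPool:
    """Minimal fixed-size connection pool (illustrative sketch only)."""

    def __init__(self, factory, size=5, acquire_timeout=2.0):
        self._factory = factory            # callable returning a new connection
        self._timeout = acquire_timeout    # seconds to wait for a free connection
        self._idle = queue.Queue(maxsize=size)
        for _ in range(size):
            self._idle.put(factory())

    @contextmanager
    def connection(self):
        try:
            conn = self._idle.get(timeout=self._timeout)
        except queue.Empty:
            raise TimeoutError("no pooled connection available") from None
        try:
            conn = self._validate(conn)
            yield conn
        finally:
            # Always hand the connection back, even if the caller raised.
            self._idle.put(conn)

    def _validate(self, conn):
        # Cheap liveness check; swap in a fresh connection if this one is broken.
        try:
            conn.execute("SELECT 1")
            return conn
        except sqlite3.Error:
            conn.close()
            return self._factory()


# Each ":memory:" connection is its own private database; that is fine here
# because the demo only exercises the pooling logic.
pool = ConnectionPool(
    lambda: sqlite3.connect(":memory:", check_same_thread=False),
    size=2,
    acquire_timeout=0.1,
)

with pool.connection() as a, pool.connection() as b:
    try:
        with pool.connection():
            pass
        exhausted = False
    except TimeoutError:
        exhausted = True  # both connections are checked out

with pool.connection() as c:
    value = c.execute("SELECT 1").fetchone()[0]

print(exhausted, value)  # True 1
```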

“Effective connection pooling minimizes overhead and enhances application responsiveness.”

Hardware and Infrastructure Considerations

The performance of a database is intrinsically linked to its underlying hardware and infrastructure. As applications scale, understanding the implications of different hardware configurations becomes essential for maintaining efficiency and speed. A well-optimized infrastructure can significantly enhance database response times, support higher transaction volumes, and ultimately improve user experiences.

Impact of Different Hardware Configurations

Various hardware configurations can lead to significant differences in database performance. Here’s a detailed look at how specific components influence overall efficiency:

CPU

The processing power of the CPU directly impacts query execution times. Multi-core processors can handle more simultaneous operations, reducing bottlenecks in high-load environments.

Memory (RAM)

Sufficient RAM is crucial for in-memory database operations. The more data that can be cached in memory, the fewer read operations need to hit slower disk storage, leading to faster access times.

Storage Type

The choice between HDDs and SSDs plays a pivotal role. SSDs offer much faster read/write speeds compared to traditional hard drives, which can drastically cut down on data retrieval times in busy databases.

Network Speed

For distributed databases, the speed of the network can either facilitate or hinder performance. Higher bandwidth allows for quicker data transfers, essential for maintaining speed in cloud-based solutions.

Role of Cloud Solutions in Scaling Database Performance

Cloud technology has revolutionized how databases are managed and scaled. It allows organizations to dynamically adjust resources based on demand without the need for physical hardware upgrades. The benefits of cloud solutions include:

Elasticity

Many managed cloud databases can scale compute and storage up or down with demand, in some cases automatically. This elasticity helps prevent performance degradation during peak loads.

Managed Services

Many cloud providers offer managed database services that handle maintenance, backups, and updates, thus freeing up resources for development and optimization.

Global Distribution

Cloud services can deploy databases across multiple geographical locations, reducing latency for users and improving accessibility for global applications.

Comparison of Database Management Systems

When selecting a Database Management System (DBMS), different systems exhibit varying performance features based on their architecture and optimization capabilities. Below is a comparative table highlighting key performance attributes of various popular DBMSs:

| Database Management System | Storage Type | Performance Features | Scalability | Use Cases |
|---|---|---|---|---|
| MySQL | HDD/SSD | High concurrency, InnoDB for transactions | Vertical and horizontal scaling | Web applications, e-commerce |
| PostgreSQL | HDD/SSD | Advanced indexing, full-text search | Vertical scaling with partitioning | Complex applications, data analytics |
| MongoDB | SSD preferred | Document-oriented storage, horizontal scaling | Dynamic sharding | Real-time analytics, big data |
| Microsoft SQL Server | HDD/SSD | Optimized for transactions, robust performance tuning | Vertical scaling with enterprise support | Enterprise-level applications, financial data |
| Oracle Database | HDD/SSD | Advanced optimizations, partitioning, clustering | Highly scalable, enterprise-level support | Large enterprises, mission-critical applications |

Monitoring and Continuous Improvement

In large applications, database performance is not a fixed property but one that requires ongoing scrutiny and refinement. To keep it optimal, organizations need effective monitoring tools and a strategy for continuous improvement. This section covers essential tools for assessing database performance and outlines an approach for making consistent enhancements.

Essential Tools for Monitoring Database Performance

Monitoring the performance of a database is crucial for identifying bottlenecks and ensuring a seamless user experience. Several tools are pivotal in providing insights into database behavior and performance metrics. The following tools are widely recognized for their effectiveness:

- Prometheus: An open-source monitoring tool that collects metrics from configured targets at specified intervals, allowing for real-time alerting and visualization.

- New Relic: A comprehensive performance monitoring solution that provides detailed insights into application performance, including database response times and error rates.

- SolarWinds Database Performance Analyzer: A tool that offers deep performance insights and identifies query performance issues, enabling DBAs to take proactive measures.

- pgAdmin: Specifically for PostgreSQL, pgAdmin provides detailed statistics and monitoring capabilities that help track database performance trends.

- Oracle Enterprise Manager: A powerful tool for Oracle databases that enables real-time monitoring and automated insights into database performance metrics.

Strategy for Implementing Continuous Performance Improvement

A structured approach is essential for fostering a culture of continuous performance improvement. Organizations should adopt a strategy that includes the following key components:

- Regular Performance Audits: Conducting periodic audits to evaluate database performance helps in pinpointing areas needing enhancement. These audits should include a review of query execution plans and indexing strategies.

- Feedback Loop: Establishing a mechanism for feedback from users and developers can help in identifying performance issues that may not be evident through automated monitoring tools.

- Benchmarking: Implementing benchmarking against industry standards or similar applications provides a reference point for assessing database performance and identifying improvement opportunities.

- Agile Optimization: Embracing an agile methodology allows for incremental changes and faster iterations in performance tuning, enabling teams to respond swiftly to emerging issues.

Gathering and Analyzing Performance Metrics Effectively

The ability to gather and analyze performance metrics is paramount in the quest for database efficiency. Effective strategies include:

- Comprehensive Metric Collection: Utilize monitoring tools to capture a wide array of metrics, such as query response times, CPU usage, memory utilization, and disk I/O statistics. Each of these metrics plays a critical role in understanding overall system health.

- Data Visualization: Leverage data visualization tools to create dashboards that present performance metrics intuitively. This helps stakeholders quickly grasp performance trends and identify anomalies.

- Automated Alerts: Setting up automated alerts for critical thresholds ensures that performance degradations are identified and addressed promptly, reducing the potential for significant downtime.

- Historical Data Analysis: Analyzing historical performance data enables the identification of trends and patterns, guiding future optimization efforts and strategic planning.
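Collection and alerting can start small. The sketch below, in Python with the standard sqlite3, statistics, and time modules, times a query repeatedly, reports the 50th and 95th percentile latencies, and flags an alert when p95 exceeds a threshold. The function names and the 50 ms threshold are hypothetical; in practice these numbers would come from a monitoring tool such as those listed earlier rather than an ad hoc loop.

```python
import sqlite3
import statistics
import time


def time_query(conn, sql, params=(), runs=50):
    """Run a query `runs` times and return each run's latency in milliseconds."""
    latencies = []
    for _ in range(runs):
        start = time.perf_counter()
        conn.execute(sql, params).fetchall()
        latencies.append((time.perf_counter() - start) * 1000.0)
    return latencies


def summarize(latencies_ms, p95_threshold_ms):
    """Return p50/p95 latency and whether p95 breaches the alert threshold."""
    cuts = statistics.quantiles(latencies_ms, n=100)  # 99 percentile cut points
    p50, p95 = cuts[49], cuts[94]
    return {"p50_ms": p50, "p95_ms": p95, "alert": p95 > p95_threshold_ms}


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE orders (id INTEGER PRIMARY KEY, total REAL)")
report = summarize(time_query(conn, "SELECT COUNT(*) FROM orders"), p95_threshold_ms=50.0)
print(report)
```

Percentiles are used instead of the mean because a handful of very slow queries can hide behind a healthy-looking average.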

“Continuous improvement is better than delayed perfection.”